It has felt really great to dive into Practical Deep Learning for Coders in earnest after several false starts over the past couple of years. It has always seemed like such a perfect resource for getting established in the subject, but the lessons use some experimental teaching techniques that really require you to buy in and commit to the whole experience.

This blog is one product of that buy-in: the teacher and TAs encourage you at multiple points in the second lesson to start a blog (and even recommend this platform, Quarto). Below, I’ll talk through the first two lessons, including the second lesson’s assignment of building my own small image classification app, and what I thought of the material I came across.

Lesson 1

I didn’t spend too much time on Lesson 1, since I’m already familiar with most of its subject matter from working through Andrew Ng’s Machine Learning course and doing some other ML work. However, it was still quite helpful. I enjoyed seeing the field’s evolution traced by someone with decades of experience in it.

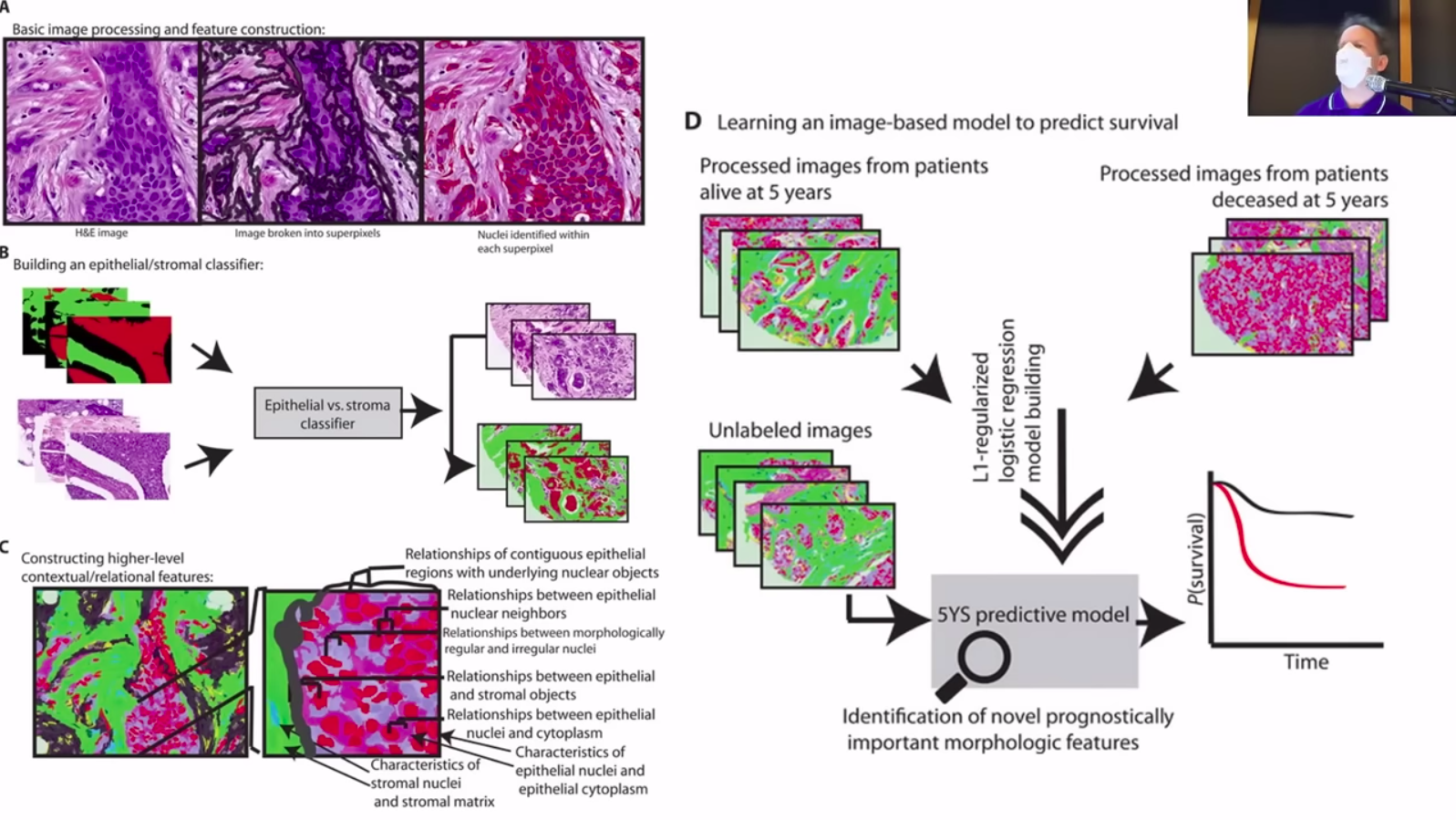

For instance, the picture above shows how people used to build image models: hand-coding all the edge detection and manually crawling through the pixels with an aggregate of simpler algorithms. Gotta respect those old-timers!
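
To make the “old way” a bit more concrete, here is a minimal sketch of one hand-designed feature: a Sobel filter that detects vertical edges in a tiny grayscale image stored as nested lists. The image and function names are made up for illustration, and this is not code from the course; it just shows the kind of fixed, hand-written filter that older pipelines stacked together, and that a modern neural net learns on its own instead.

```python
def convolve2d(image, kernel):
    """Valid (no padding) 2-D cross-correlation, which is what deep learning
    libraries usually call "convolution"."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Multiply the 3x3 window by the kernel element-wise and sum.
            total = 0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        out.append(row)
    return out


# Hand-coded Sobel kernel: responds to left-to-right brightness changes.
SOBEL_X = [
    [-1, 0, 1],
    [-2, 0, 2],
    [-1, 0, 1],
]

# Hypothetical 5x5 image: dark on the left, bright on the right.
image = [[0, 0, 0, 9, 9] for _ in range(5)]

edges = convolve2d(image, SOBEL_X)
for row in edges:
    print(row)  # [0, 36, 36] on every row: the filter fires only near the edge
```

The kernel values were chosen by a human, which is exactly the part deep learning automates: a convolutional layer starts with random kernels and learns useful ones from data.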

It was also nice to hear that you don’t need insanely powerful computers or huge datasets to practice deep learning. As an example, the instructor, Jeremy Howard, walks us through a notebook that builds an “is it a bird or not” detector, and you can see how to fine-tune an existing image recognition model without much data of your own (and since the notebook runs in the cloud, you don’t need your own GPU).
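
Part of why so little data is enough: when fine-tuning, the pretrained layers already produce useful features, so at first you only need to train a small new “head” on top of them. As I understand it, fastai’s `fine_tune` first trains just the new head with the pretrained layers frozen, then unfreezes everything. The toy below is a pure-Python sketch of that first, frozen phase only, and everything in it is hypothetical: `pretrained_features` is a fixed stand-in for a real pretrained body like a ResNet, and the two-number “images” are invented.

```python
import math


def pretrained_features(x):
    """Hypothetical stand-in for a frozen pretrained body: a fixed function
    that is never updated during training."""
    brightness, greenness = x
    return [brightness, greenness, brightness * greenness]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_head(samples, labels, lr=0.5, epochs=200):
    """Train only a new logistic-regression head on top of the frozen features."""
    weights = [0.0] * len(pretrained_features(samples[0]))
    bias = 0.0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            feats = pretrained_features(x)
            pred = sigmoid(sum(w * f for w, f in zip(weights, feats)) + bias)
            err = pred - y  # gradient of binary cross-entropy w.r.t. the logit
            weights = [w - lr * err * f for w, f in zip(weights, feats)]
            bias -= lr * err
    return weights, bias


def predict(weights, bias, x):
    feats = pretrained_features(x)
    return sigmoid(sum(w * f for w, f in zip(weights, feats)) + bias) > 0.5


# Invented "bird / not bird" data: just six examples.
birds = [(0.2, 0.9), (0.3, 0.8), (0.1, 0.7)]
not_birds = [(0.9, 0.1), (0.8, 0.2), (0.7, 0.3)]
samples = birds + not_birds
labels = [1, 1, 1, 0, 0, 0]

weights, bias = train_head(samples, labels)
accuracy = sum(predict(weights, bias, x) == bool(y)
               for x, y in zip(samples, labels)) / len(samples)
print(f"training accuracy: {accuracy:.0%}")
```

Only four numbers (three weights and a bias) are learned here, which is why a handful of examples suffices; a real head on a real pretrained model is bigger, but the principle is the same.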

I am very excited to learn more about fine-tuning. Then I could build all kinds of weird NLP apps, like tools that write custom articles or short stories, or that summarize blog articles effectively.

At the start of the last lesson, I discovered AI Quizzes, which are a great resource. Instead of using their email service, I added the questions to my existing Anki decks, and I recommend doing the same.

Lesson 2

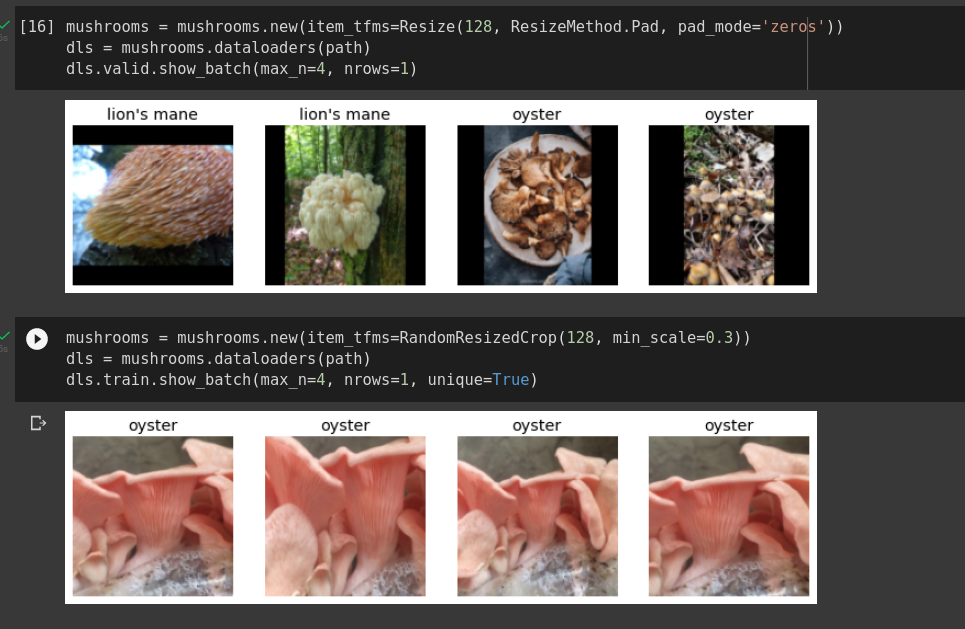

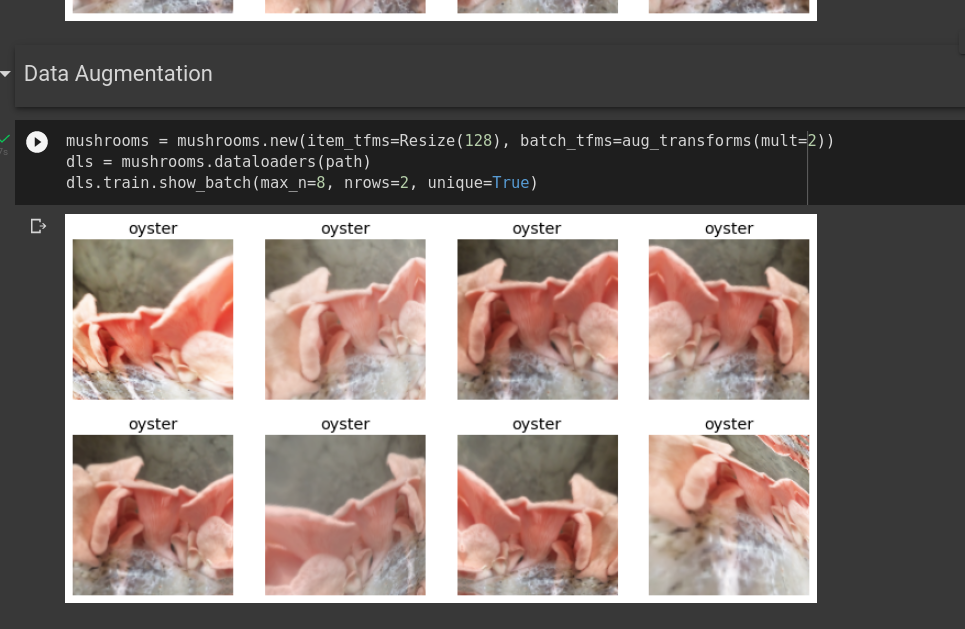

The second lesson was a more involved project. We designed our own DL app, from notebook all the way to a deployed GitHub Pages front end. Finagling all the various bits took a while, but I like this approach to learning: a long slog through small but challenging particulars. The first challenge was successfully grabbing images from DuckDuckGo image search. I chose to make a mushroom image classifier. Below you can see some of fastai’s data augmentation tools:

The method above resizes each image, and the method underneath it resizes using random values. The screenshot below shows the fully randomized data augmentation tool they end up recommending. Very cool. It’s fun to imagine the neural net learning, in this case, “pink oyster mushroom”-ness in an exaggerated world where the mushroom comes in a variety of colors, skews, and sizes. The model is boiling it down into some kind of weird, randomized Platonic form. A little trippy.
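
For intuition about what “resizing according to random values” involves, here is a minimal pure-Python sketch of how a random-resized-crop box can be chosen: pick a random fraction of the image area and a random aspect ratio, then a random position where that crop fits. The parameter names are loosely modeled on fastai’s `RandomResizedCrop`, but the body is my own sketch of the general idea, not fastai’s implementation, and the `min_scale=0.3` default is just a value I picked for illustration.

```python
import math
import random


def random_resized_crop_box(img_w, img_h, min_scale=0.3, ratio=(3 / 4, 4 / 3),
                            rng=random, attempts=10):
    """Return a random crop (left, top, width, height) covering between
    min_scale and 100% of the image area, with an aspect ratio drawn
    log-uniformly from `ratio`. The crop would then be resized to the
    model's input size."""
    area = img_w * img_h
    log_lo, log_hi = math.log(ratio[0]), math.log(ratio[1])
    for _ in range(attempts):
        target_area = area * rng.uniform(min_scale, 1.0)
        aspect = math.exp(rng.uniform(log_lo, log_hi))
        # w * h is approximately target_area, and w / h is approximately aspect.
        w = int(round(math.sqrt(target_area * aspect)))
        h = int(round(math.sqrt(target_area / aspect)))
        if 0 < w <= img_w and 0 < h <= img_h:
            left = rng.randint(0, img_w - w)
            top = rng.randint(0, img_h - h)
            return left, top, w, h
    # Fallback: use the whole image if no valid crop was found.
    return 0, 0, img_w, img_h


rng = random.Random(42)
for _ in range(3):
    print(random_resized_crop_box(640, 480, rng=rng))
```

Each epoch the model sees a different crop of the same photo, which is part of how it ends up learning the mushroom itself rather than one particular framing of it.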

fastai includes a fun little widget that lets you relabel incorrectly labeled images. It reflects Jeremy Howard’s motto: train a model before you clean your data (the reverse of how you usually hear it), because the model’s biggest mistakes point you to the images most likely to be mislabeled.
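
The idea behind “train first, clean later” fits in a few lines: compute each image’s loss under the trained model and review the highest-loss ones first. fastai surfaces this through tools like `ClassificationInterpretation.plot_top_losses` and the `ImageClassifierCleaner` widget; the sketch below is just a plain-Python illustration of the ranking step, with made-up probabilities and labels for three hypothetical mushroom classes.

```python
import math


def top_losses(probs, labels, k=3):
    """Rank samples by cross-entropy loss (-log of the probability the model
    gave the labeled class), highest first.

    probs:  one list of per-class probabilities per sample
    labels: index of the labeled class for each sample
    """
    losses = [-math.log(max(p[y], 1e-12)) for p, y in zip(probs, labels)]
    order = sorted(range(len(losses)), key=lambda i: losses[i], reverse=True)
    return [(i, losses[i]) for i in order[:k]]


# Hypothetical classes: 0 = blue oyster, 1 = lion's mane, 2 = shiitake.
probs = [
    [0.90, 0.05, 0.05],
    [0.10, 0.85, 0.05],
    [0.02, 0.03, 0.95],
    [0.70, 0.20, 0.10],  # labeled shiitake, but the model is sure it's a blue oyster
]
labels = [0, 1, 2, 2]

for i, loss in top_losses(probs, labels, k=2):
    print(f"sample {i}: loss {loss:.2f}")  # sample 3 comes out on top
```

Sample 3 is either a mislabeled image or a genuinely hard one, and either way it is the one worth a human look, which is why a model trained on messy data is still a useful cleaning tool.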

Anyway, long story short, after much finagling of Hugging Face Spaces and GitHub Pages, I managed to deploy my own little mushroom classifier here. Make sure your picture is of an Elm Oyster, a Blue Oyster, a Lion’s Mane, a Shiitake, or a Chestnut mushroom, and it’ll do a pretty good job of guessing which one it is.
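
On the Hugging Face side, the core of a Gradio app like this can be a single function that turns the model’s probabilities into a label-to-confidence dictionary, which Gradio’s `Label` output knows how to display. In a real fastai app you’d call `learn.predict(img)` on an exported learner; here `fake_predict` is a hypothetical stub with fixed probabilities so the sketch runs on its own, and the category tuple assumes the same alphabetical order as the learner’s `dls.vocab`.

```python
# Assumed to match the learner's vocab order (fastai sorts labels alphabetically).
CATEGORIES = ("Blue Oyster", "Chestnut", "Elm Oyster", "Lion's Mane", "Shiitake")


def fake_predict(img):
    """Hypothetical stand-in for learn.predict(img): returns
    (predicted label, predicted index, per-class probabilities)."""
    probs = [0.05, 0.05, 0.10, 0.70, 0.10]
    idx = max(range(len(probs)), key=probs.__getitem__)
    return CATEGORIES[idx], idx, probs


def classify_image(img, predict=fake_predict):
    """Map each category name to a plain float probability, the
    dictionary shape a Gradio Label output can display."""
    _, _, probs = predict(img)
    return dict(zip(CATEGORIES, map(float, probs)))


result = classify_image(img=None)
best = max(result, key=result.get)
print(best)  # Lion's Mane
```

Converting each probability to a plain `float` matters in the real app because fastai returns tensors, and the display layer expects ordinary numbers.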

I’m really loving this class and spending 30 minutes a day on it, six days a week. Next, I’m going to create some Anki cards from AI Quizzes with the material from Lecture 2, then charge ahead into Lecture 3.